

Contrastive learning is just meta-learning

Contrastive learning and meta-learning are two different approaches to machine learning.

Contrastive learning is a method that trains a model to differentiate between similar and dissimilar examples. In contrastive learning, the model learns to map similar inputs to nearby points in a latent space and dissimilar inputs to faraway points in the same space. By doing so, the model learns to extract features that are useful for distinguishing between different examples.
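The idea of pulling similar inputs together and pushing dissimilar inputs apart can be sketched with a classic margin-based pairwise loss. This is only an illustration: the function names, the `margin` value, and the toy 2-D embeddings are assumptions made for this example, not something prescribed by the article.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def pairwise_contrastive_loss(a, b, similar, margin=1.0):
    """Margin-based contrastive loss for one pair of embeddings.

    Similar pairs are penalized by their squared distance.
    Dissimilar pairs are penalized only if they are closer than `margin`.
    """
    d = euclidean(a, b)
    if similar:
        return d ** 2
    return max(0.0, margin - d) ** 2

# Hypothetical 2-D embeddings used only for illustration.
anchor = [0.0, 0.0]
near = [0.1, 0.0]
far = [2.0, 0.0]

loss_similar = pairwise_contrastive_loss(anchor, near, similar=True)
loss_dissimilar = pairwise_contrastive_loss(anchor, far, similar=False)
```

A dissimilar pair that is already further apart than the margin contributes no loss, so training effort goes to pairs that are still confusable.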

Meta-learning, on the other hand, is a broader approach that aims to train a model to learn how to learn: the model is trained across many tasks so that it can adapt to a new task quickly by leveraging what it learned from previous ones. Many meta-learning algorithms, such as MAML, learn a set of initial parameters that can be fine-tuned for a specific task in a few gradient steps.

While there may be some similarities between contrastive learning and meta-learning, they are distinct approaches to machine learning with different goals and techniques. Contrastive learning is focused on learning to differentiate between similar and dissimilar examples, while meta-learning is focused on learning how to adapt to new tasks quickly.


Is contrastive learning meta-learning?

Contrastive learning and meta-learning are two distinct areas of machine learning that share some similarities but have different goals and approaches.

Contrastive learning is usually applied as a self-supervised (label-free) method that learns representations of data by contrasting similar and dissimilar examples. The goal is to learn a representation that maximizes the similarity between positive pairs (for example, two augmented views of the same input or, in the supervised variant, examples from the same class) and minimizes the similarity between negative pairs (views of different inputs, or examples from different classes).
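One common way to express this objective is an InfoNCE-style loss, which treats the positive as the correct answer among a set of candidates. The sketch below is a minimal pure-Python version for a single anchor; the use of cosine similarity, the `temperature` value, and the function names are assumptions chosen for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def info_nce(anchor, positive, negatives, temperature=0.1):
    """Cross-entropy of picking the positive among positive + negatives."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Numerically stable log-sum-exp over all candidates.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    # Negative log-probability assigned to the positive (index 0).
    return log_sum - logits[0]

# Hypothetical embeddings: the positive is close to the anchor.
anchor = [1.0, 0.0]
positive = [0.9, 0.1]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
loss = info_nce(anchor, positive, negatives)
```

The loss is small when the anchor is much more similar to its positive than to any negative, and grows when a negative looks more similar than the positive.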

Meta-learning (also known as “learning to learn”), on the other hand, aims to develop algorithms that can quickly adapt to new tasks or environments from few or no examples by leveraging knowledge learned from previous tasks or experiences. It often involves training a model on a diverse set of tasks and learning a generalizable representation or initialization that can be quickly adapted to new tasks.

Although contrastive learning and meta-learning share the goal of learning representations, they differ in their approach and objectives. Contrastive learning focuses on learning a representation that maximizes similarity between similar examples and dissimilarity between dissimilar examples, while meta-learning focuses on learning how to quickly adapt to new tasks or environments. Therefore, while contrastive learning can be used as a pre-training step in meta-learning, it is not itself considered to be meta-learning.
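To illustrate how a pretrained representation might be reused for fast adaptation, the sketch below classifies a query by its nearest class prototype, in the style of prototypical networks (a technique swapped in here for illustration, not one named by the article). It assumes the embeddings were already produced by some pretrained encoder; the vectors and labels are hypothetical.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]

def classify(query, support):
    """Assign `query` to the class whose prototype is nearest.

    `support` maps each label to a few embedding vectors (the "shots").
    """
    prototypes = {label: mean_vector(vs) for label, vs in support.items()}
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))

# Hypothetical 2-way, 2-shot task on precomputed embeddings.
support = {
    "cat": [[0.9, 0.1], [1.0, 0.0]],
    "dog": [[0.0, 1.0], [0.1, 0.9]],
}
prediction = classify([0.8, 0.2], support)
```

No gradient step is needed to handle the new task here: adaptation consists of averaging a handful of support embeddings, which only works well if the representation already separates the classes.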

What is the meta-learning concept?

Meta-learning is the process of learning how to learn. It refers to the ability of an artificial intelligence (AI) system or a machine learning model to learn and adapt to new tasks quickly and efficiently, based on its past learning experiences.

Meta-learning models are designed to identify common patterns across different learning tasks, and then use this knowledge to improve the speed and accuracy of future learning tasks. These models can also help in selecting the best learning algorithm or hyperparameters for a given task.
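As a rough illustration of reusing knowledge across tasks, the sketch below applies a Reptile-style update to a one-parameter model. Each task is a toy regression problem `y = slope * x`, and the slope range, learning rates, and function names are all assumptions made for this example.

```python
import random

def adapt(w, slope, steps=3, lr=0.1, xs=(-1.0, 0.5, 1.0)):
    """Inner loop: a few gradient steps on one task (fit y = slope * x)."""
    for _ in range(steps):
        # Gradient of the mean squared error for the model y_hat = w * x.
        grad = sum(2 * (w * x - slope * x) * x for x in xs) / len(xs)
        w -= lr * grad
    return w

def reptile(num_iterations=500, meta_lr=0.1, seed=0):
    """Outer loop: nudge the shared initialization toward adapted weights."""
    rng = random.Random(seed)
    w_meta = 0.0
    for _ in range(num_iterations):
        slope = rng.uniform(2.0, 4.0)           # sample a training task
        w_task = adapt(w_meta, slope)           # adapt to that task
        w_meta += meta_lr * (w_task - w_meta)   # move the initialization
    return w_meta

w_meta = reptile()

# One-step adaptation to an unseen task, from the learned and a naive start.
new_slope = 3.5
error_meta = abs(adapt(w_meta, new_slope, steps=1) - new_slope)
error_naive = abs(adapt(0.0, new_slope, steps=1) - new_slope)
```

Because the learned initialization ends up near the typical slope of the training tasks, a single gradient step on a new task lands closer to the target than the same step taken from zero.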

Meta-learning is particularly useful in situations where data is limited or when new tasks arise frequently. By using meta-learning, an AI system can quickly adapt to new environments and learn from small amounts of data.

Overall, meta-learning is an exciting field of research in machine learning and artificial intelligence, with many potential applications in areas such as robotics, natural language processing, and computer vision.

What is contrastive learning?

Contrastive learning is a machine learning approach that learns representations of data by comparing examples that should be treated as similar with examples that should be treated as different. The goal is a representation that maps similar data points to nearby points in the embedding space and dissimilar data points to distant points.

Contrastive learning works by forming pairs of data points, embedding them with an encoder, and computing a distance or similarity score between the two embeddings. The encoder is then optimized so that positive pairs (for example, points from the same class) are mapped closer together, while negative pairs (points from different classes) are mapped further apart.

One of the advantages of contrastive learning is that it does not necessarily require labeled data. In the self-supervised setting, a positive pair is typically generated by applying two random augmentations to the same data point, while other data points drawn from the dataset serve as negatives.
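In the label-free setting, training pairs are commonly built by creating two perturbed views of each example. The sketch below uses small Gaussian noise as a stand-in for real augmentations such as crops or flips; the `augment` and `make_views` helpers and the noise level are hypothetical choices for illustration.

```python
import random

def augment(x, rng, noise=0.05):
    """Return a perturbed copy of x (a stand-in for crops, flips, etc.)."""
    return [v + rng.gauss(0.0, noise) for v in x]

def make_views(batch, seed=0):
    """Create two independently augmented views of every example."""
    rng = random.Random(seed)
    return [(augment(x, rng), augment(x, rng)) for x in batch]

batch = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
views = make_views(batch)

# views[i][0] and views[i][1] form a positive pair;
# views[i][0] and views[j][1] with i != j are treated as negatives.
```

No class labels appear anywhere: the only supervision is the knowledge of which views came from the same original example.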

Contrastive learning has been used in a variety of applications, including computer vision, natural language processing, and speech recognition. Methods such as SimCLR and MoCo, for example, have achieved strong results in image representation learning.

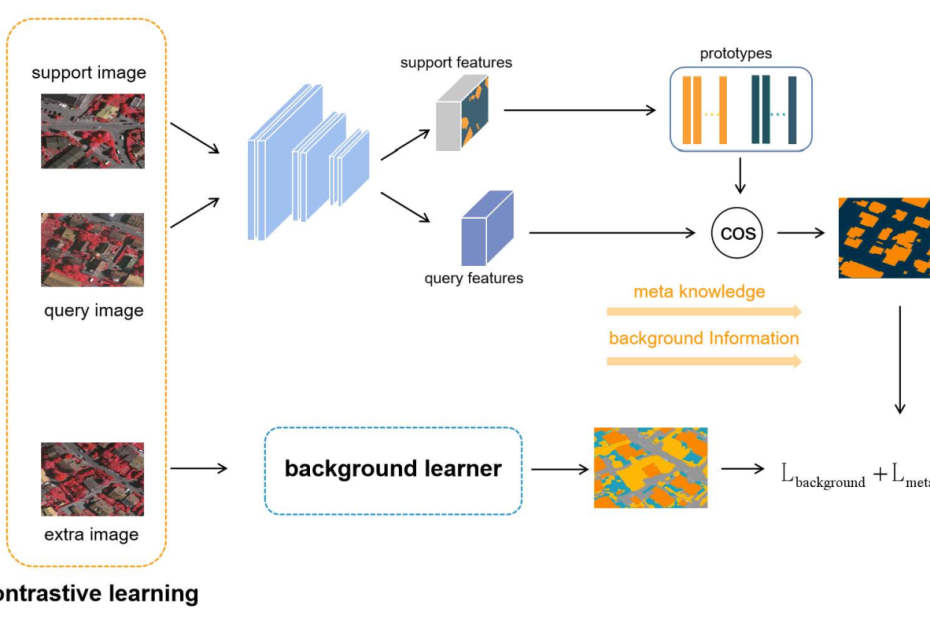

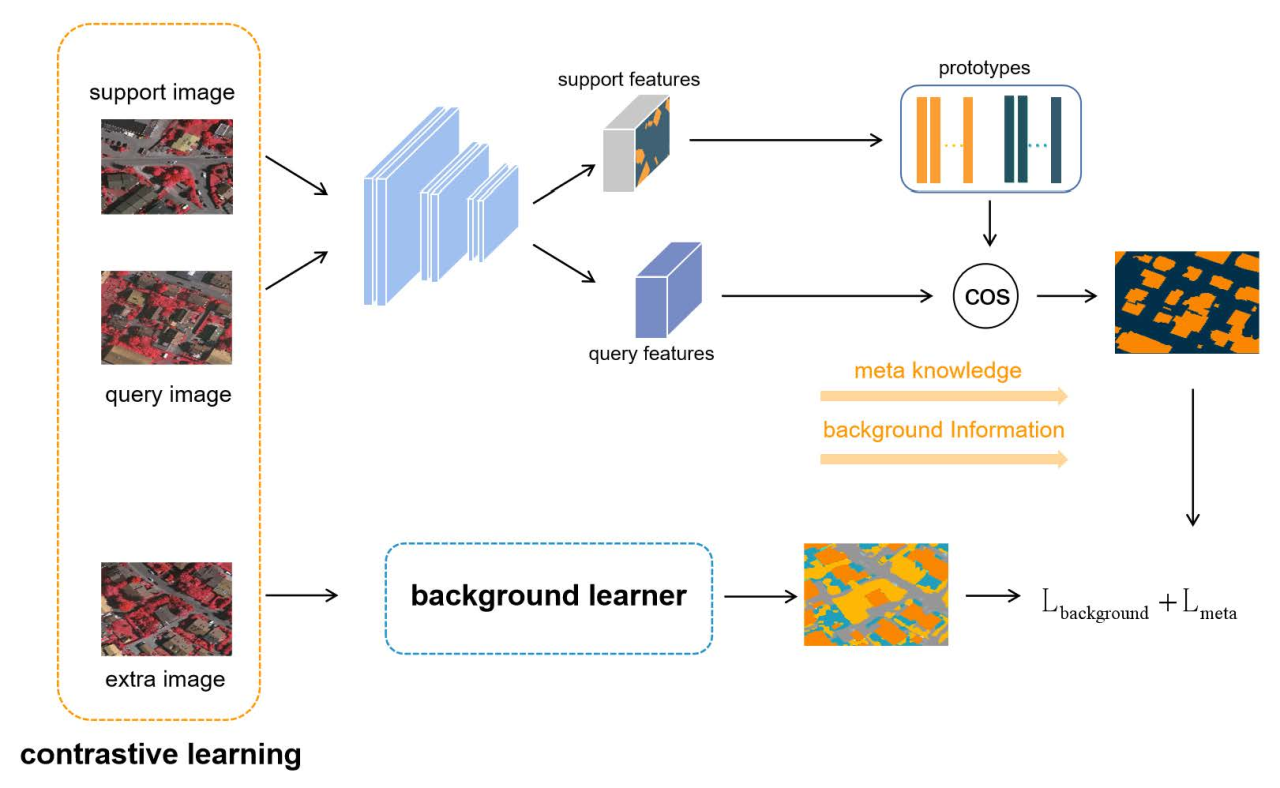

Images related to contrastive learning is just meta-learning

Found 13 contrastive learning is just meta-learning related images.

Meta-learning vs self-supervised learning

Meta-learning and self-supervised learning are two different approaches to machine learning.

Meta-learning, also known as “learning to learn,” is a subfield of machine learning that focuses on developing algorithms that can quickly adapt to new tasks with minimal training data. The idea is to leverage knowledge acquired from prior tasks to improve the learning process for new tasks. In other words, meta-learning algorithms learn how to learn from experience.

Self-supervised learning, on the other hand, is a type of unsupervised learning in which the algorithm is trained on unlabeled data and the training signal is derived from the data itself. A common proxy (pretext) task is to predict missing or masked parts of the input; contrasting augmented views of the same example is another. Once the model is trained on the proxy task, it can be fine-tuned on a downstream task with labeled data.

The main difference between meta-learning and self-supervised learning is that meta-learning is a technique used to improve the learning process for new tasks, while self-supervised learning is a technique used to train a model on unlabeled data. The two are not mutually exclusive: meta-learning can be combined with supervised or self-supervised training to improve the performance of a model on new tasks.
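A masked-prediction proxy task can be set up without any labels, because the targets are simply the hidden parts of the input. The sketch below only builds the training examples and does not train a model; the `MASK` token, the `mask_prob` value, and the function name are assumptions for illustration.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.3, seed=0):
    """Hide a random subset of tokens and keep them as prediction targets."""
    rng = random.Random(seed)
    inputs, targets = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)    # the model sees a placeholder
            targets.append(tok)    # and must predict the original token
        else:
            inputs.append(tok)
            targets.append(None)   # no loss is computed at this position
    return inputs, targets

tokens = ["contrastive", "learning", "maps", "similar", "inputs", "together"]
inputs, targets = mask_tokens(tokens)
```

A model trained on such pairs would be asked to recover each hidden token from its visible context, and the resulting encoder could then be fine-tuned on a labeled downstream task.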

MetAug: contrastive learning via meta feature augmentation

MetAug (“Contrastive Learning via Meta Feature Augmentation”) is a contrastive learning method that augments features directly in the latent space instead of relying only on augmentations of the raw input. The motivation is that contrastive learning depends heavily on informative, or “hard”, positive and negative features, and random input augmentations do not always provide them.

The basic idea is to train an augmentation generator alongside the encoder. The generator produces additional features from the encoder's outputs, and it is updated with a meta-learning procedure that takes the encoder's performance into account, so that the generated features are useful for the contrastive objective. The resulting encoder can then be used to extract features for downstream tasks such as classification or regression.

Overall, meta feature augmentation is a promising technique for improving contrastive representation learning, particularly when the amount of input data is limited. However, further research is needed to fully understand its potential and limitations, and to explore its applications in different domains.

